Monitoring Cyclomatic Complexity

From NeoWiki

Revision as of 08:54, 3 March 2007

- What to do when code complexity is off the charts

Andrew Glover, President, Stelligent Incorporated

28 Mar 2006

- If complexity has been shown to correlate to defects, doesn't it make sense to monitor your code base's complexity values? Andrew Glover shows you how to use simple code metrics and Java™-based tools to monitor cyclomatic complexity.

Every developer has an opinion about what code quality means and most have ideas about how to spot poorly written code. Even the term code smell has entered the collective vocabulary as a way to describe code in need of improvement.

One code smell that, interestingly, splits developers straight down the middle is the smell of too many code comments. Some claim judicious code commenting is a good thing, while others claim it only serves as a mechanism to explain overly complex code. Clearly, Javadocs™ serve a useful purpose, but how many inline comments are adequate to maintain code? If the code is written well enough, shouldn't it explain itself?

What this tells us about code smell as a mechanism for evaluating code is that it's subjective. What I might deem as terribly smelly code could be the finest piece of work someone else has ever written. Do the following phrases sound familiar?

- Sure, it's a bit confusing (at first), but look how extensible it is!!

or

- It's confusing to you because you obviously don't understand patterns.

What we need is a means to objectively evaluate code quality, something that can tell us, definitively, that the code we're looking at is risky. Believe it or not, that something exists! The mechanisms for objectively evaluating code quality have been around for quite a while; it's just that most developers ignore them. They're called code metrics.

A history of code metrics

Decades ago, a few super smart people began studying code hoping to define a system of measurements that could correlate to defects. This was an interesting proposition: by studying patterns in buggy code, they hoped to create formal models that could then be evaluated to catch defects before they became defects.

Somewhere along the way, some other super smart people also decided to see if, by studying code, they could measure developer productivity. The classic metric of lines of code per developer seemed fair enough on the surface:

- Joe produces more code than Bill; therefore, Joe is more productive and worth every penny we pay him. Plus, I noticed Bill hangs out at the water cooler a lot. I think we should fire Bill.

But this productivity metric was a spectacular disappointment in practice, mostly because it was easily abused. Some code measurements counted inline comments as lines of code, and the metric actually favored cut-and-paste style development.

- Joe wrote a lot of defects! Every other defect is assigned to him. It's too bad we fired Bill -- his code is practically defect free.

Predictably, the productivity studies proved wildly inaccurate, but not before the metrics were widely used by a management body eager to account for the value of each individual's abilities. The bitter reaction from the developer community was justifiable, and for some, the hard feelings have never really gone away.

Diamonds in the rough

Despite these failures, there were some gems in those complexity-to-defect correlation studies. Most developers have long since forgotten them, but for those who go digging -- especially if you're digging in pursuit of code quality -- there is value to be found in applying them today. For example, have you ever noticed that long methods are sometimes hard to follow? Ever had trouble understanding the logic in an excessively deeply nested conditional? Your instinct for eschewing such code is correct. Long methods and methods with a high number of paths are hard to understand and, interestingly, tend to correlate to defects.

I'll use some examples to show you what I mean.

A sea of numbers

Studies have shown that the average person has the capacity to handle about seven pieces of data in her or his head, plus or minus two. That is why most people can easily remember phone numbers but have a more difficult time memorizing credit card numbers, launch sequences, and other number sequences longer than seven digits.

This principle also applies to understanding code. You've probably seen a snippet of code like the one in Listing 1 before:

Listing 1. Numbers at work

    if (entityImplVO != null) {
        List actions = entityImplVO.getEntities();
        if (actions == null) {
            actions = new ArrayList();
        }
        Iterator enItr = actions.iterator();
        while (enItr.hasNext()) {
            entityResultValueObject arVO = (entityResultValueObject) enItr.next();
            Float entityResult = arVO.getActionResultID();
            if (assocPersonEventList.contains(actionResult)) {
                assocPersonFlag = true;
            }
            if (arVL.getByName(
                    AppConstants.ENTITY_RESULT_DENIAL_OF_SERVICE).getID().equals(entityResult)) {
                if (actionBasisId.equals(actionImplVO.getActionBasisID())) {
                    assocFlag = true;
                }
            }
            if (arVL.getByName(AppConstants.ENTITY_RESULT_INVOL_SERVICE).getID().equals(entityResult)) {
                if (!reasonId.equals(arVO.getStatusReasonID())) {
                    assocFlag = true;
                }
            }
        }
    } else {
        entityImplVO = oldEntityImplVO;
    }

Listing 1 shows up to nine different paths. The snippet is actually part of a 350-plus-line method that was shown to have 41 distinct paths. Imagine if you were tasked to modify this method for the purpose of adding a new feature. If you didn't write the method, do you think you could make the requisite changes without introducing a defect?

Of course, you'd write a test case, but do you think your test case could isolate your particular change in that sea of conditionals?
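The figure of nine for Listing 1 can be reproduced by hand: the snippet contains eight `if` and `while` decisions, and each one adds a path on top of the single straight-through path. The following is only a rough sketch of that tally, not how any real metrics tool works. The class name `BranchTally` and the method `estimatePaths` are hypothetical, and the keyword matching is deliberately naive (it ignores the ternary operator and would miscount keywords inside comments or string literals).

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper for illustration only; not part of any tool named in this article.
final class BranchTally {

    // Each match is treated as one decision point that opens an extra path.
    // Assumption: plain keyword matching is good enough for a quick estimate.
    private static final Pattern DECISION =
            Pattern.compile("\\b(if|while|for|case|catch)\\b|&&|\\|\\|");

    /** Returns the number of decision points found in the source text, plus one. */
    static int estimatePaths(String source) {
        Matcher matcher = DECISION.matcher(source);
        int decisions = 0;
        while (matcher.find()) {
            decisions++;
        }
        return decisions + 1;
    }

    public static void main(String[] args) {
        // Two ifs and one while: three decisions plus the default path.
        String snippet = "if (a) { while (b) { if (c) { x(); } } } else { y(); }";
        System.out.println(estimatePaths(snippet)); // prints 4
    }
}
```

Note that `else` is not counted: it is the other side of a decision that the matching `if` has already contributed.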

Measuring path complexity

Cyclomatic complexity, pioneered during those studies I previously mentioned, precisely measures path complexity. By counting the linearly independent paths through a method, this integer-based metric aptly depicts method complexity. In fact, various studies over the years have determined that methods having a cyclomatic complexity (or CC) greater than 10 have a higher risk of defects. Because CC represents the paths through a method, this is an excellent number for determining how many test cases will be required to cover every branch of a method. For example, the following code (which you might remember from the first article in this series) includes a logical defect:

Listing 2. PathCoverage has a defect!

    public class PathCoverage {
        public String pathExample(boolean condition){
            String value = null;
            if(condition){
                value = " " + condition + " ";
            }
            return value.trim();
        }
    }

In response, I can write one test, which achieves 100 percent line coverage:

Listing 3. One test yields full coverage!

    import junit.framework.TestCase;

    public class PathCoverageTest extends TestCase {
        public final void testPathExample() {
            PathCoverage clzzUnderTst = new PathCoverage();
            String value = clzzUnderTst.pathExample(true);
            assertEquals("should be true", "true", value);
        }
    }

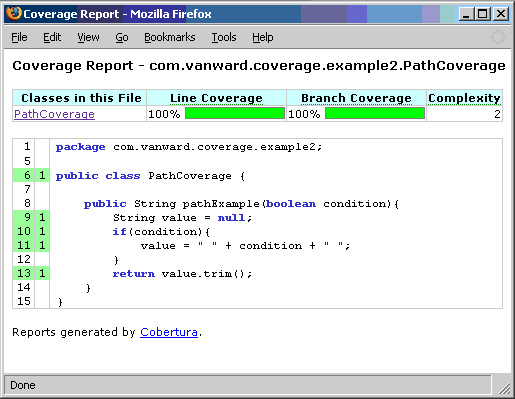

Next, I run a code coverage tool, such as Cobertura, and get the report shown in Figure 1:

Well, that's disappointing. The code coverage report indicates 100 percent coverage; however, we know this is misleading.

Two for two

Note that the pathExample() method in Listing 2 has a CC of 2 (one for the default path and one for the if path). Using CC as a more precise gauge of coverage implies a second test case is required. In this case, it would be the path taken by not going into the if condition, as shown by the testPathExampleFalse() method in Listing 4:

Listing 4. Down the path less taken

    import junit.framework.TestCase;

    public class PathCoverageTest extends TestCase {

        public final void testPathExample() {
            PathCoverage clzzUnderTst = new PathCoverage();
            String value = clzzUnderTst.pathExample(true);
            assertEquals("should be true", "true", value);
        }

        public final void testPathExampleFalse() {
            PathCoverage clzzUnderTst = new PathCoverage();
            String value = clzzUnderTst.pathExample(false);
            assertEquals("should be false", "false", value);
        }
    }

As you can see, running this new test case yields a nasty NullPointerException. What's interesting here is that we were able to spot this defect using cyclomatic complexity rather than code coverage. Code coverage indicated we were done after one test case, but CC forced us to write an additional one. Not bad, eh?
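Once the second test has exposed the defect, the repair is small. Here is a minimal sketch of one possible fix, assuming the intended result for a false argument is the string "false" (which is what testPathExampleFalse() asserts). The class name `PathCoverageFixed` is hypothetical and chosen only to keep it apart from Listing 2.

```java
// Hypothetical corrected variant of Listing 2; the class name is an assumption.
final class PathCoverageFixed {

    public String pathExample(boolean condition) {
        // Build the string for both true and false so value is never null.
        String value = " " + condition + " ";
        return value.trim();
    }

    public static void main(String[] args) {
        PathCoverageFixed fixed = new PathCoverageFixed();
        System.out.println(fixed.pathExample(true));  // prints true
        System.out.println(fixed.pathExample(false)); // prints false
    }
}
```

With the conditional gone, the method has a single path, so its CC drops to 1. A variant that keeps the if block and assigns a non-null default would also pass both tests while leaving the CC at 2.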

CC on the charts

A few open source tools available to Java developers can report on cyclomatic complexity. One such tool is JavaNCSS, which determines the length of methods and classes by examining Java source files. What's more, this tool also gathers the cyclomatic complexity of every method in a code base. By configuring JavaNCSS either through its Ant task or through a Maven plug-in, you can generate an XML report that lists the following:

Resources

- Learn

- "TestNG makes Java unit testing a breeze" (Filippo Diotalevi, developerWorks, January 2005): TestNG isn't just really powerful, innovative, extensible, and flexible; it also illustrates an interesting application of Java annotations.

- "An early look at JUnit 4" (Elliotte Rusty Harold, developerWorks, September 2005): Obsessive code tester Elliotte Harold takes JUnit 4 out for a spin and details how to use the new framework in your own work.

- Using JUnit extensions in TestNG (Andrew Glover, thediscoblog.com, March 2006): Just because a framework claims to be a JUnit extension doesn't mean it can't be used within TestNG.

- Statistical Testing with TestNG (Cedric Beust, beust.com, February 2006): Advanced testing with TestNG, written by the project's founder.

- "Rerunning of failed tests" (Andrew Glover, testearly.com, April 2006): A closer look at rerunning failed tests in TestNG.

- In pursuit of code quality: "Resolve to get FIT" (Andrew Glover, developerWorks, February 2006): The Framework for Integrated Tests facilitates communication between business clients and developers.

- JUnit 4 you (Fabiano Cruz, Fabiano Cruz's Blog, June 2006): An interesting entry on JUnit 4 ecosystem support.

- Code coverage of TestNG tests (Improve your code quality forum, March 2006): Join the discussion on integrating code coverage tools with TestNG.

- In pursuit of code quality series (Andrew Glover, developerWorks): See all the articles in this series ranging from code metrics to testing frameworks to refactoring.

- Get products and technologies

- Download TestNG: Take TestNG for a test drive.

- Download JUnit: Find out what's new with JUnit 4.

About the author

Andrew Glover is president of Stelligent Incorporated, which helps companies address software quality with effective developer testing strategies and continuous integration techniques that enable teams to monitor code quality early and often. He is the co-author of Java Testing Patterns (Wiley, September 2004).
